RealValue: Our Sweet Algorithmic Suite

Every agency seems to have proprietary algorithms for optimizing ad buying, so perhaps it's not surprising that Goodway has an algorithmic suite too. What's different about us, though, is that we don't hide behind buzzwords: we lay out more precisely what we're doing and then back it up with cold, hard experimental data showing that our algorithms significantly improve performance.

In a previous post, we described our base bid reduction algorithm. Here, we describe our bid-factoring algorithm, which uses a mathematical model to determine how much more or less to bid on any particular inventory segment. For example, suppose a media trader notices that users on the Chrome browser are converting at a higher rate. She might then raise the ad group's bid on Chrome to win more of that inventory. What we've done is automate that process: for every programmatic campaign and every inventory dimension, we calculate the mathematically optimal amount to raise or lower bids.
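This post doesn't publish RealValue's model, so the following is only a minimal sketch of the general idea under simple assumptions of our own. It assumes a segment's bid multiplier should track its conversion rate relative to the ad group's baseline (in an idealized second-price auction where every conversion is worth the same, bidding in proportion to expected conversion rate is the textbook value-based bid). It also shrinks small segments toward the baseline and clamps the result. The function `bid_factor` and its `prior_impressions`, `floor`, and `cap` parameters are hypothetical illustrations, not Goodway's implementation.

```python
def bid_factor(seg_conversions: int, seg_impressions: int,
               total_conversions: int, total_impressions: int,
               prior_impressions: float = 10_000.0,
               floor: float = 0.5, cap: float = 2.0) -> float:
    """Hypothetical bid multiplier for one inventory segment (e.g. browser=Chrome).

    Assumption, not RealValue's published model: bid in proportion to the
    segment's expected conversion rate relative to the ad group's baseline.
    """
    if total_impressions <= 0 or total_conversions <= 0:
        return 1.0  # no signal yet: leave the base bid unchanged
    baseline_cvr = total_conversions / total_impressions
    # Shrink the segment's rate toward the baseline, as if we had already seen
    # `prior_impressions` extra impressions converting at the baseline rate.
    smoothed_cvr = (seg_conversions + prior_impressions * baseline_cvr) / (
        seg_impressions + prior_impressions)
    factor = smoothed_cvr / baseline_cvr
    return min(max(factor, floor), cap)  # clamp to [floor, cap]


# Hypothetical example: Chrome converts 90 times on 60,000 impressions,
# while the whole ad group converts 200 times on 200,000 impressions.
chrome = bid_factor(90, 60_000, 200, 200_000)
print(f"Chrome bid factor: {chrome:.2f}")
```

With these made-up numbers, Chrome's raw conversion rate is 1.5 times the baseline, but smoothing pulls the multiplier down to about 1.43, so a noisy segment can't swing the bid too far on thin data.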

A skeptical marketer might ask: why not use machine learning for this? Surely machine learning is better? Actually, no. Machine learning is used to make predictions; we use fancy math to calculate the exact optimum. You don't need a deep neural network when you can calculate the right answer outright.

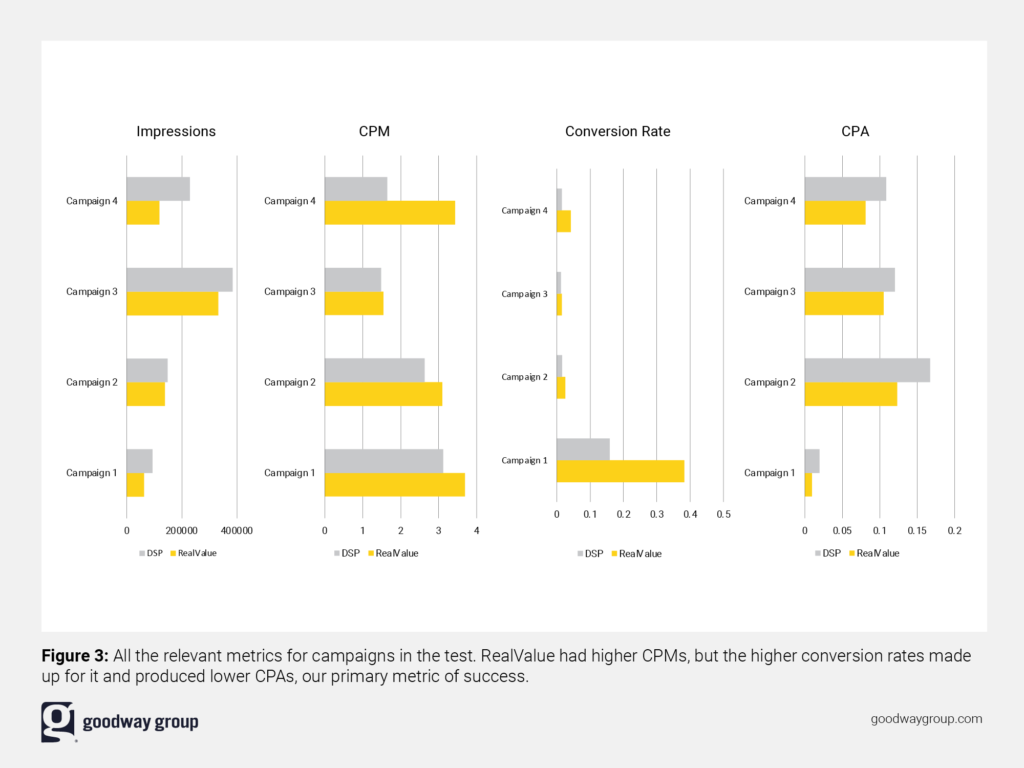

One reasonable objection, though, is that we'll hurt the downstream cost-per-acquisition (CPA) metric, since we're now bidding more for high-value inventory. While it's counterintuitive, the experimental results show that the benefit of more conversions outweighs the cost of paying more for them.
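The arithmetic behind this is short. Spend is CPM × impressions / 1000 and conversions are conversion rate × impressions, so impressions cancel and CPA = CPM / (1000 × conversion rate). Paying a higher CPM therefore lowers CPA whenever the conversion rate rises by a larger factor than the CPM does. The numbers below are hypothetical, chosen only to illustrate the formula, and are not taken from the test.

```python
def cpa(cpm: float, conversions_per_impression: float) -> float:
    """Cost per acquisition implied by a CPM and a per-impression conversion rate.

    spend = cpm * impressions / 1000 and conversions = cvr * impressions,
    so impressions cancel out: CPA = cpm / (1000 * cvr).
    """
    return cpm / (1000.0 * conversions_per_impression)


# Hypothetical numbers for illustration only (not from the A/B test):
baseline = cpa(cpm=2.00, conversions_per_impression=0.0010)  # $2.00 CPM, 0.10% CVR
factored = cpa(cpm=2.40, conversions_per_impression=0.0015)  # 20% higher CPM, 50% higher CVR
print(f"baseline CPA ${baseline:.2f}, factored CPA ${factored:.2f}")
```

Here a 20% higher CPM paired with a 50% higher conversion rate takes CPA from $2.00 down to $1.60.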

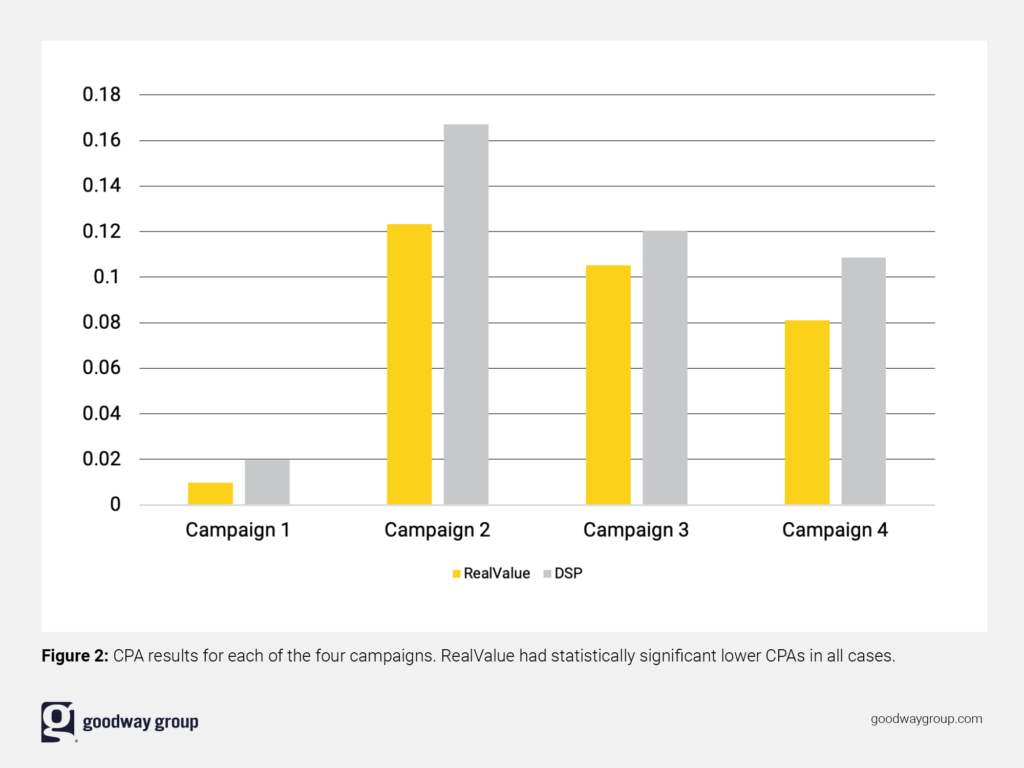

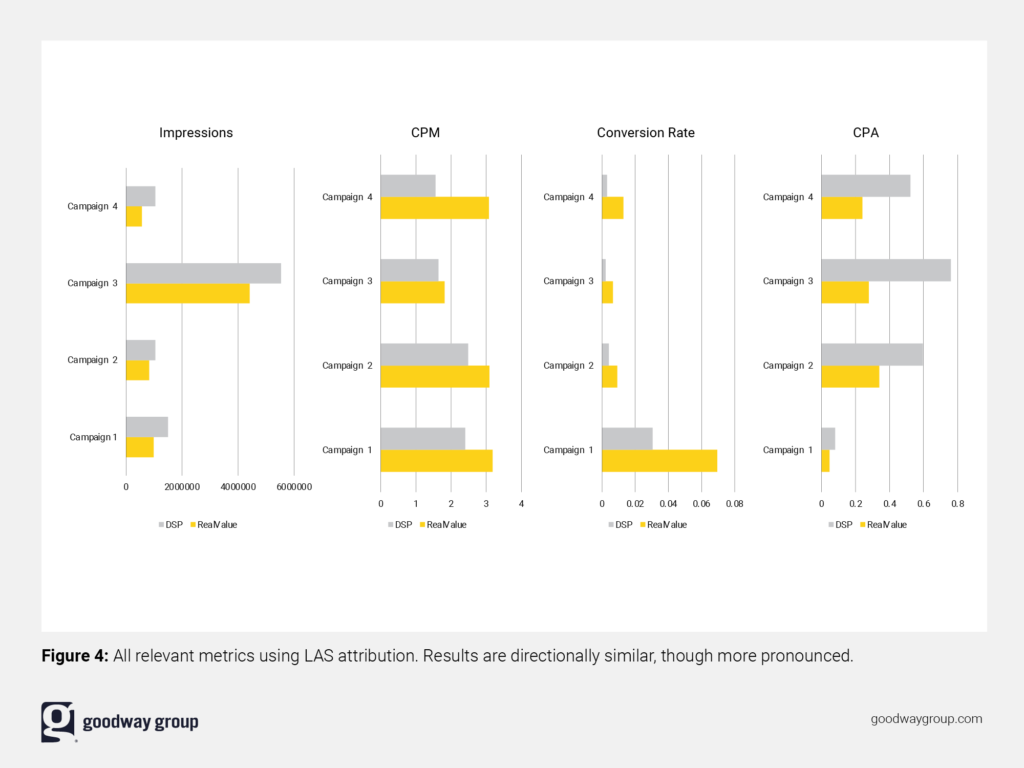

In the next section, we describe a recent A/B test showing that our homegrown bid-factoring algorithmic suite produced a 36% lower CPA than the DSP's out-of-the-box optimizations under multi-touch attribution (MTA), and a 63% lower CPA under last-ad-seen attribution (LAS). Just a heads-up: it gets nerdy past this point.
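Because conversions = spend / CPA, a percentage drop in CPA translates into a multiplier on conversions per dollar: a CPA reduction of r buys 1 / (1 − r) times as many conversions for the same spend. The sketch below applies that identity to the two reported reductions; it assumes the two arms spent the same amount, which the 50/50 budget split targets but which actual delivery may not hit exactly. The helper name `conversions_multiplier` is our own.

```python
def conversions_multiplier(cpa_reduction: float) -> float:
    """How many times more conversions the same spend buys when CPA drops by
    `cpa_reduction` (e.g. 0.36 for "36% lower CPA"), since conversions = spend / CPA."""
    if not 0.0 <= cpa_reduction < 1.0:
        raise ValueError("cpa_reduction must be in [0, 1)")
    return 1.0 / (1.0 - cpa_reduction)


for label, reduction in [("MTA", 0.36), ("LAS", 0.63)]:
    multiplier = conversions_multiplier(reduction)
    print(f"{label}: {reduction:.0%} lower CPA -> {multiplier:.2f}x conversions per dollar")
```

So a 36% lower CPA corresponds to about 1.56 times as many conversions per dollar, and a 63% lower CPA to about 2.70 times as many.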

Experimental Setup

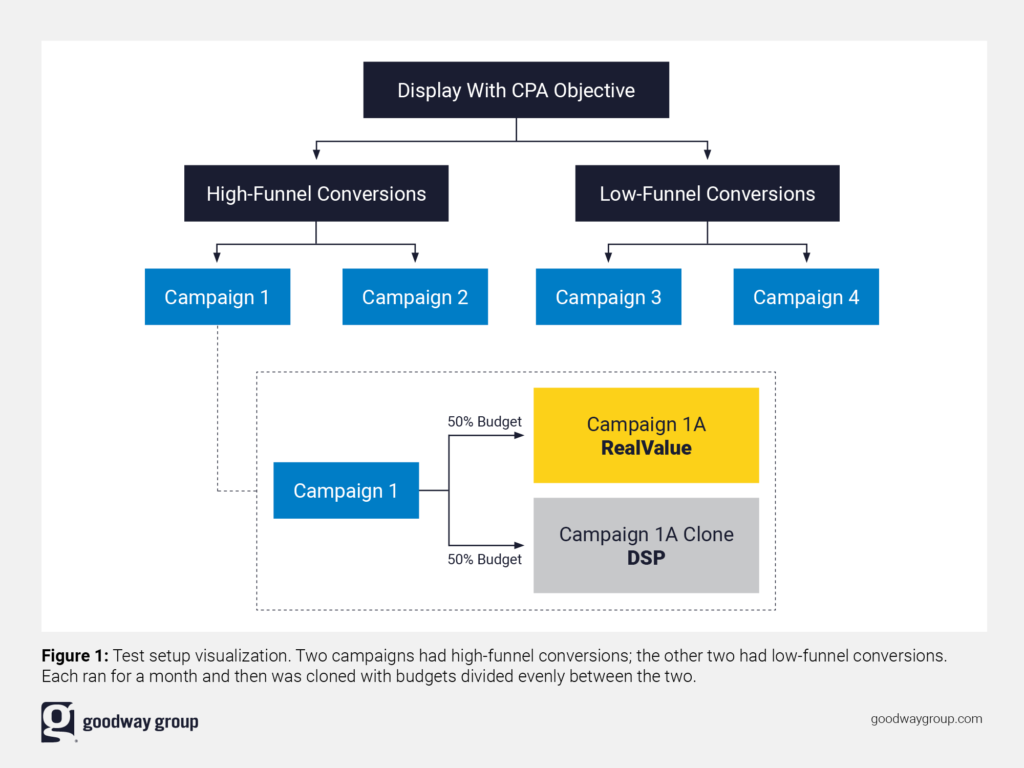

We took four display campaigns (two with high-funnel conversions and two with low-funnel conversions) that had each been running for at least a month. We cloned each campaign and split its budget down the middle: 50% to the original and 50% to the clone. We used segmented audiences to ensure that each user could land in only one of the two campaigns.
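The post says only that segmented audiences kept each user in one campaign. One common way to get that property, sketched here as an assumption rather than a description of Goodway's or the DSP's actual setup, is to hash a stable user ID together with an experiment-specific salt and assign the user by the hash. The `assign_arm` function and the salt string are hypothetical.

```python
import hashlib


def assign_arm(user_id: str, salt: str = "bid-factor-ab-2022-04") -> str:
    """Deterministically assign a user to one of two equal-sized arms.

    The same user_id always hashes to the same arm, so no user can land in
    both campaigns; the salt keeps this split independent of other experiments.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 2  # 0 or 1, roughly 50/50
    return "A" if bucket == 0 else "B"


users = [f"user-{i}" for i in range(10_000)]
share_a = sum(assign_arm(u) == "A" for u in users) / len(users)
print(f"share in arm A: {share_a:.3f}")  # close to 0.5
```

Hashing rather than random assignment means the split needs no stored lookup table: any system that sees the user ID can recompute the arm.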

For the control campaign, we applied our RealValue bid-factor optimizations, and for the test campaign, we applied the demand-side platform's (DSP's) out-of-the-box algorithmic optimizations. The test ran throughout April 2022. Because RealValue optimizes against MTA while the DSP's algorithm optimizes against LAS, we calculated and compared CPA under both attribution models.
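To make the two attribution models concrete, here is a minimal sketch of how the same conversion paths can yield different CPAs. LAS gives a conversion's full credit to the last ad seen. The post doesn't say which MTA model RealValue uses, so an even (linear) split across touchpoints stands in for MTA here purely as an assumption. The campaign names, spend, and paths are hypothetical.

```python
from collections import defaultdict


def attribute(paths, model):
    """Credit each conversion path to the campaigns that touched it.

    paths: list of touchpoint lists in chronological order, each ending in a
           conversion, e.g. ["social", "display_a"].
    model: "las" gives all credit to the last ad seen;
           "linear" (our stand-in for MTA) splits credit evenly across touches.
    """
    credit = defaultdict(float)
    for path in paths:
        if not path:
            continue
        if model == "las":
            credit[path[-1]] += 1.0
        elif model == "linear":
            share = 1.0 / len(path)
            for touch in path:
                credit[touch] += share
        else:
            raise ValueError(f"unknown model: {model}")
    return dict(credit)


def cpa_by_campaign(spend, paths, model):
    """CPA = spend / attributed conversions, for campaigns with any credit."""
    credit = attribute(paths, model)
    return {c: spend[c] / credit[c] for c in spend if credit.get(c, 0.0) > 0}


# Hypothetical paths and spend, for illustration only.
paths = [
    ["social", "display_a"],
    ["display_a"],
    ["display_a", "search"],
    ["display_b", "search"],
    ["display_b"],
]
spend = {"display_a": 300.0, "display_b": 300.0}
print("LAS:   ", cpa_by_campaign(spend, paths, "las"))
print("linear:", cpa_by_campaign(spend, paths, "linear"))
```

In this toy data, `display_b` has a $300 CPA under LAS but a $200 CPA under the linear split, because LAS hands its shared conversion entirely to `search`.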

For brevity, we’ve omitted all the finer campaign setup details.

Results

After the month-long test, plus another two weeks for conversions to accrue, we calculated the MTA CPA and LAS CPA for each campaign. In all four tests and under both attribution models, the RealValue bid-factoring algorithmic suite had a statistically significantly lower CPA.
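The post doesn't say which significance test was used. As one possible approach, sketched under our own assumptions: treat each arm's conversion count as Poisson with mean spend / CPA. Under the null hypothesis of equal CPA, and given n total conversions, arm A's count is then Binomial(n, spend_A / (spend_A + spend_B)), which gives an exact one-sided test. This suits integer counts such as LAS conversions; fractional MTA credit doesn't fit a Poisson count model directly. The function names and example figures are hypothetical.

```python
import math


def binom_sf(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p), summed in log space to avoid overflow."""
    log_terms = [
        math.lgamma(n + 1) - math.lgamma(i + 1) - math.lgamma(n - i + 1)
        + i * math.log(p) + (n - i) * math.log1p(-p)
        for i in range(k, n + 1)
    ]
    if not log_terms:
        return 0.0
    m = max(log_terms)
    return min(1.0, math.exp(m) * sum(math.exp(t - m) for t in log_terms))


def lower_cpa_pvalue(conv_a: int, spend_a: float, conv_b: int, spend_b: float) -> float:
    """One-sided p-value for 'arm A has a lower CPA than arm B'.

    Assumes each arm's conversion count is Poisson with mean spend / CPA.
    Under H0 (equal CPA), given n total conversions, arm A's count is
    Binomial(n, spend_a / (spend_a + spend_b)).
    """
    if spend_a <= 0 or spend_b <= 0:
        raise ValueError("spend must be positive in both arms")
    n = conv_a + conv_b
    p = spend_a / (spend_a + spend_b)
    return binom_sf(conv_a, n, p)


# Hypothetical example: equal $5,000 spend, 150 vs. 100 conversions.
print(f"p = {lower_cpa_pvalue(150, 5_000.0, 100, 5_000.0):.4f}")
```

With these made-up counts the p-value falls well below 0.01; with identical counts it sits just above 0.5, as expected when there is no difference.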

RealValue did have higher CPMs (cost per thousand impressions) for these campaigns, but the larger share of higher-converting inventory still resulted in lower CPAs, as seen in Figure 3.

Despite the DSP’s algorithm using a LAS attribution model to determine its optimizations, we found the benefits of RealValue were even more pronounced in this alternative attribution model.

Conclusion

We admit that four campaigns are too small a sample to support sweeping, general claims about our algorithmic superiority in digital advertising. However, this test offers compelling evidence that our bid-factoring algorithmic suite delivers real value to our clients.